Tips for developing robust batch jobs

Posted on March 23rd, 2015 in Data Integration, Tip by Kornelius

Even in the era of real-time web applications and software as a service hosted in the cloud, there is still a need for silent batch processing running in the background. JSR 352: Batch Applications for the Java Platform is evidence of this. As part of Java Enterprise Edition 7, this standard provides a consistent model for batch job processing.

The major problem with background processes is that negative effects for end users typically do not show until the faulty jobs have been running for some time. At that point it might already be too late to catch up. As batch jobs process masses of data, it isn’t always possible to make up for the lost computation time. In this article we provide some hints on how to develop your batch jobs in a robust and reliable way, so that they keep doing their daily work in a live system.

What is a batch anyway?

Batch processing has a long history in electronic data processing (EDP). A batch consists of similar orders that are processed in sequence or in parallel without user interaction. It typically fulfills recurring and business critical tasks. One can differentiate between three ways to start a batch job:

- Time: triggered by a defined schedule, e.g. each minute, each day or every month

- Event: triggered by an external dependency, e.g. a new file has been created

- Manually: triggered by user request

Batch jobs are suitable for long-running, compute-intensive tasks and mass data processing. Single jobs can be stopped during execution, their state can be saved at checkpoints, and orders can be partitioned and executed in parallel.

In the context of Enterprise Content Management, batch jobs are typically used for archiving, format conversion (e.g. PDF generation) and the general integration of input and output interfaces. These operations are not visible to the user, often run asynchronously to user events and are essential for reliable business processes. Each batch job can be seen as a cog that needs to play its part to keep the clockwork running.

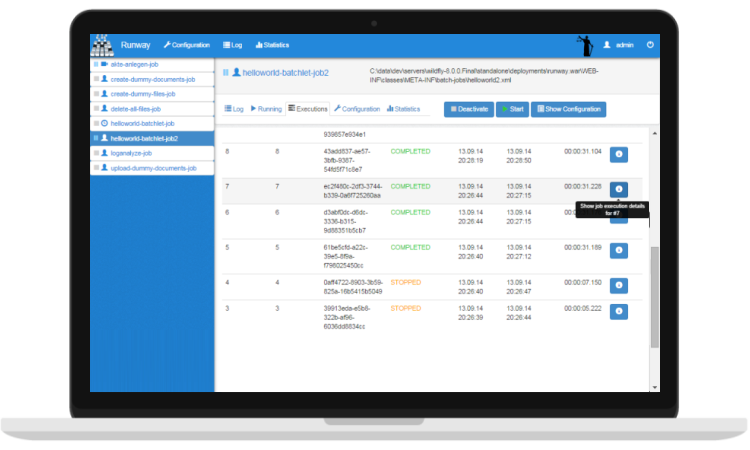

Our product Runway helps you to manage processes and keep the upper hand in daily operations. Although management tools like Runway make things easier, they cannot completely prevent developers from implementing processes that are not error tolerant or that waste system resources. If you are a developer, you should consider the following tips to ensure the robustness of your batch processes and make daily operations easier for your colleagues and support staff.

1. Comprehensive consistency in process operations

Similar process operations should be handled by the same components. This is an often-cited advantage of object-oriented design, so it shouldn’t be news to you. Depending on the expected use cases, create abstract components that can be reused across multiple processes. This eases the implementation of new processes and ensures that batch jobs behave consistently. It also reduces complexity and, with it, the maintenance and documentation effort.

Imagine a group of jobs that wait for events from the file system. As soon as certain signal files are created in the monitored directory, job execution starts. The status of an order is tracked through the file extension of its signal file and can therefore be monitored in the file system. Every process needs a consistent status model with file extensions for the ready, working and error states. For this use case Runway provides, for example, a FileSystemSemaphore, which sets a lock on the order’s signal file and manages the file extensions uniformly by exposing methods for changing the order status.

With such a helper you can implement a consistent state machine without much effort for all processes that use file based order processing.
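To illustrate the idea, here is a minimal sketch of such a file-extension state machine. It is not Runway's FileSystemSemaphore; the names `OrderStatus` and `SignalFile`, the extensions and the extra `DONE` state are assumptions made for this example only.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

// Hypothetical status model; the extensions are illustrative, not Runway's.
enum OrderStatus {
    READY(".ready"), WORKING(".working"), ERROR(".error"), DONE(".done");

    final String extension;

    OrderStatus(String extension) {
        this.extension = extension;
    }
}

// Hypothetical helper: tracks one order's signal file and renames it on each status change.
class SignalFile {
    private Path path;
    private OrderStatus status;

    SignalFile(Path readyFile) {
        if (!readyFile.getFileName().toString().endsWith(OrderStatus.READY.extension)) {
            throw new IllegalArgumentException("Not a ready signal file: " + readyFile);
        }
        this.path = readyFile;
        this.status = OrderStatus.READY;
    }

    // Only READY -> WORKING and WORKING -> DONE or ERROR are allowed.
    synchronized void transitionTo(OrderStatus next) throws IOException {
        boolean allowed = (status == OrderStatus.READY && next == OrderStatus.WORKING)
                || (status == OrderStatus.WORKING
                        && (next == OrderStatus.DONE || next == OrderStatus.ERROR));
        if (!allowed) {
            throw new IllegalStateException(status + " -> " + next + " is not allowed");
        }
        String name = path.getFileName().toString();
        String base = name.substring(0, name.length() - status.extension.length());
        Path target = path.resolveSibling(base + next.extension);
        // The move throws if the source file is gone, e.g. because another worker renamed it first.
        Files.move(path, target, StandardCopyOption.ATOMIC_MOVE);
        path = target;
        status = next;
    }

    Path path() {
        return path;
    }

    OrderStatus status() {
        return status;
    }
}
```

Because every process goes through the same `transitionTo` method, no job can invent its own extensions or skip a state.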

2. Use expected preconditions defensively

Every developer makes implicit or explicit assumptions about the state of the program at certain lines in the code. In practice there are only a few real invariants that never deviate from the expected value range. It is therefore recommended to check preconditions explicitly in your own code and thereby confirm or refute them. This is also known as defensive programming.

Especially at the interface between job steps it is reasonable to check the passed-in data for correctness. Is all required metadata (schema-)valid? Does the document have the expected format? Is the output stream still writable? A quick analysis of the given data does not hurt. That way you prevent unexpected exceptions and can state in the log file why an order couldn’t be executed correctly. A helpful tool for checking preconditions is the Guava library.

Especially if operations are hard to undo, you should mistrust every assumption. The performance cost of extended checks is usually negligible. For time-critical processes you should at least focus the checks on the spots with the highest risk.
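A precondition check at the start of a job step might look like the following sketch. It uses only the JDK to stay self-contained; Guava's `Preconditions.checkNotNull` and `Preconditions.checkArgument` would serve the same purpose. The `ConversionOrder` type, the `.tif` rule and the `documentType` field are assumptions for illustration.

```java
import java.util.Map;
import java.util.Objects;

// Hypothetical order type used only for this illustration.
class ConversionOrder {
    final String documentName;
    final Map<String, String> metadata;

    ConversionOrder(String documentName, Map<String, String> metadata) {
        this.documentName = documentName;
        this.metadata = metadata;
    }
}

class ConversionStep {
    // Validates the incoming order before any hard-to-undo work starts.
    static void checkPreconditions(ConversionOrder order) {
        Objects.requireNonNull(order, "order must not be null");
        Objects.requireNonNull(order.documentName, "documentName must not be null");
        Objects.requireNonNull(order.metadata, "metadata must not be null");
        if (!order.documentName.toLowerCase().endsWith(".tif")) {
            throw new IllegalArgumentException(
                    "Expected a .tif document but got: " + order.documentName);
        }
        String documentType = order.metadata.get("documentType");
        if (documentType == null || documentType.trim().isEmpty()) {
            throw new IllegalArgumentException(
                    "Missing mandatory metadata field 'documentType' for " + order.documentName);
        }
    }
}
```

The exception messages name the offending order and field, so the log entry already tells support staff why the order was refused.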

3. Plan exit-points for a graceful shutdown

Depending on the use case, a batch job may have a very long runtime for a single transaction. If the execution environment receives an exit signal during this runtime, or the process is stopped, the job should be able to exit in an orderly fashion. This requires the developer to define exit points that do not leave behind an undefined state. A check for a shutdown condition is ideally placed in a loop condition, for example, if the loop is expected to be long-running.

It all boils down to proper transaction management. The developer needs to ensure that transactions don’t exceed the shutdown waiting time. Single job steps should be kept lean, so that a job execution can conveniently start over at every intermediate step. This ensures a robust restart and prevents messy remnants of failed executions, which are cumbersome and annoying to remove.
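The loop-condition check can be sketched as follows. The class `StoppableWorker` and its methods are hypothetical; the point is only that the stop flag is evaluated between orders, never in the middle of one, and that the returned index serves as a simple checkpoint for a restart.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.function.Consumer;

// Hypothetical worker that checks for a stop request between two orders.
class StoppableWorker {
    private final AtomicBoolean stopRequested = new AtomicBoolean(false);

    // May be called from another thread; returns immediately.
    void requestStop() {
        stopRequested.set(true);
    }

    // Processes orders from startIndex on and returns the index of the
    // first unprocessed order, so a restart can resume exactly there.
    int run(List<String> orders, int startIndex, Consumer<String> handler) {
        int next = startIndex;
        // The exit point is the loop condition: an order is either fully processed or not started.
        while (next < orders.size() && !stopRequested.get()) {
            handler.accept(orders.get(next));
            next++;
        }
        return next;
    }
}
```

In a real job, `requestStop()` might be wired to a JVM shutdown hook via `Runtime.getRuntime().addShutdownHook(...)`. The hook would then also have to wait until `run` has returned, because the JVM halts once all hooks have finished.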

4. Pay attention to parallelism

If jobs are executed in parallel, care has to be taken that they do not have unmanaged dependencies on one another. Otherwise the execution is prone to race conditions, which cause errors that are hard to find and hard to reproduce reliably. An important way to prevent this is to identify a unique token that indicates whether an order for a specific entry is already being executed.

As an example, suppose a classification criterion is chosen during the execution of an order and must not be assigned more than once. If multiple orders are executed in parallel, there is a risk that the preliminary check succeeds for all of them and they all receive the same criterion. To prevent this, proper mutex handling has to be implemented.
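Within a single JVM, the token idea can be sketched with a concurrent set. The class `InFlightOrders` is a hypothetical name; jobs that are spread across several processes or machines would need a shared lock instead, such as a database row or a lock file.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical registry of tokens that are currently being processed.
class InFlightOrders {
    private final Set<String> tokens = ConcurrentHashMap.newKeySet();

    // Atomically claims the token; returns false if another job already holds it.
    boolean tryClaim(String token) {
        return tokens.add(token);
    }

    void release(String token) {
        tokens.remove(token);
    }

    // Runs the work only if the token could be claimed and always releases it afterwards.
    boolean runExclusively(String token, Runnable work) {
        if (!tryClaim(token)) {
            return false;
        }
        try {
            work.run();
            return true;
        } finally {
            release(token);
        }
    }
}
```

Checking and claiming happen in one atomic step (`Set.add`), which closes the gap between a separate "is it free?" check and the later assignment.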

If orders need to be executed in a specific sequence, the easiest solution is to forgo the advantages of parallelism, queue the orders and execute them one after another. Only if performance is a critical success factor should the complexity of partial parallelism be accepted in this scenario.
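A simple way to queue orders in Java is a single-threaded executor, which runs submitted tasks sequentially in submission order. The following is a sketch; the class name `SequentialOrderQueue` and the one-minute timeout are arbitrary choices for this example.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class SequentialOrderQueue {
    // Runs the given orders strictly one after another and returns them in execution order.
    static List<String> runInOrder(List<String> orders) throws InterruptedException {
        List<String> executed = Collections.synchronizedList(new ArrayList<String>());
        ExecutorService queue = Executors.newSingleThreadExecutor();
        for (String order : orders) {
            // Stand-in for the real work on this order.
            queue.execute(() -> executed.add(order));
        }
        queue.shutdown(); // accept no new orders; already queued ones still run
        if (!queue.awaitTermination(1, TimeUnit.MINUTES)) {
            throw new IllegalStateException("Orders did not finish in time");
        }
        return executed;
    }
}
```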

5. Modularity ensures testability

Job steps should be regarded as single modules with narrowly defined input and output parameters to ensure high testability. Every step therefore receives a kind of initiator that fills its parameters from the given context during execution. In a test case the step can then be executed separately from the other parts of the batch job as required. A helper function that returns the output elements makes it easier to check assertions in unit and integration tests.

The more cleanly an execution step is abstracted from the specific execution context, the fewer dependencies need to be provided in the corresponding test case, which should be the goal for unit tests. If the needed level of abstraction cannot be reached, the specific execution context needs to be simulated during test execution, for which tools like Arquillian exist.
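One possible shape for such a module is sketched below. The interface `JobStep`, the example step `TitleNormalizerStep` and the `StepInitiator` are hypothetical names; the job context is modeled as a plain map for simplicity.

```java
import java.util.Map;

// Hypothetical contract: a step maps an explicit input to an explicit output.
interface JobStep<I, O> {
    O execute(I input);
}

// Pure step logic with no dependency on the batch runtime.
class TitleNormalizerStep implements JobStep<String, String> {
    @Override
    public String execute(String rawTitle) {
        // Trim the title and collapse runs of whitespace into single spaces.
        return rawTitle.trim().replaceAll("\\s+", " ");
    }
}

// Hypothetical initiator: reads the step's input from the job context and stores its output there.
class StepInitiator {
    static <I, O> void run(JobStep<I, O> step, Map<String, Object> context,
                           String inputKey, String outputKey) {
        @SuppressWarnings("unchecked")
        I input = (I) context.get(inputKey);
        context.put(outputKey, step.execute(input));
    }
}
```

A unit test can call `new TitleNormalizerStep().execute(...)` directly without any context, while an integration test exercises the same step through `StepInitiator.run` with a prepared map.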

6. Uniqueness of orders

To make single orders easy to trace, a unique identification criterion is needed. In the case of an error, support staff should be able to identify the affected order easily. A UUID is the tool of choice to make a job unique and give it a persistent identity. Ideally this cryptic UUID should be extended with characteristics that have subject-specific meaning. It is much easier for support staff to identify an order and talk about it with an end user on the basis of, for example, a document type or a textual subject.
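A sketch of such a combined identifier follows. The class `OrderId`, its fields and the text format are assumptions chosen for illustration; `java.util.UUID` is part of the JDK.

```java
import java.util.UUID;

// Hypothetical identifier: a UUID for uniqueness plus readable, subject-specific parts.
class OrderId {
    final UUID uuid;
    final String documentType;
    final String subject;

    OrderId(UUID uuid, String documentType, String subject) {
        this.uuid = uuid;
        this.documentType = documentType;
        this.subject = subject;
    }

    static OrderId create(String documentType, String subject) {
        return new OrderId(UUID.randomUUID(), documentType, subject);
    }

    // Readable form for logs and support tickets: type, subject, then the UUID.
    @Override
    public String toString() {
        return documentType + " '" + subject + "' [" + uuid + "]";
    }
}
```

The UUID alone stays the technical key, while the readable prefix is what support staff and end users would actually quote.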

7. Logging for error analysis

Logging with standard mechanisms like log4j facilitates automatic evaluation and offers a multitude of output formats. All log levels should be utilized. A common practice is to write everything down to the DEBUG level into a separate log file in order to monitor correct execution during the first days of productive operation. If an error occurs in this scenario, the full log information is available. In our experience this verbose log level is justified for a short introduction period, when application behavior might differ because the configuration of the production environment varies from that of the quality assurance environment.

It is helpful to write INFO log messages at the start and end of job steps. This behavior can easily be moved into a parent class, so that messages are written consistently and not forgotten. If an error occurs, this information helps support staff to prequalify the issue, as they can deduce the faulty job step from the messages. ERROR and FATAL log entries should record the full exception stack trace to provide the developer with as much information as possible.

The log entries should be filterable by the unique order identifier. Otherwise it is difficult to keep track of an order’s state, as its entries are interleaved with those of other orders in a parallel execution scenario.
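The parent-class approach might look like the sketch below. To stay free of dependencies it uses `java.util.logging` from the JDK rather than log4j, so `SEVERE` stands in for ERROR here. The class names `LoggedStep` and `ArchiveStep` and the `[order=...]` prefix are assumptions for this example.

```java
import java.util.logging.Level;
import java.util.logging.Logger;

// Hypothetical parent class: every step logs its start and end with the order identifier.
abstract class LoggedStep {
    private static final Logger LOG = Logger.getLogger(LoggedStep.class.getName());

    // Template method: subclasses implement doRun and cannot forget the log messages.
    final void run(String orderId) {
        String step = getClass().getSimpleName();
        LOG.info("[order=" + orderId + "] start " + step);
        try {
            doRun(orderId);
            LOG.info("[order=" + orderId + "] end " + step);
        } catch (RuntimeException e) {
            // Passing the exception attaches it to the log record, so handlers can print the stack trace.
            LOG.log(Level.SEVERE, "[order=" + orderId + "] failed " + step, e);
            throw e;
        }
    }

    protected abstract void doRun(String orderId);
}

// Example step; the actual work is omitted.
class ArchiveStep extends LoggedStep {
    @Override
    protected void doRun(String orderId) {
        // archive the document that belongs to orderId
    }
}
```

Because each message starts with the same `[order=...]` prefix, the entries of one order can be filtered out of a log shared by parallel executions.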

8. Fault tolerance by branching

In a lot of cases it’s legitimate to execute a job with gaps or with assumptions for missing data, as opposed to aborting it with an error state. Whether jobs may finish in different end states depends on the requirements for data quality in the overall system context. It might be acceptable, for example, to deliver partially empty metadata fields if they can be highlighted, reviewed and corrected in downstream systems. If partially filled orders are allowed, there should be an accurate specification of which fields are absolutely mandatory and which may be omitted, in order to decide between rejection and partial execution.

If an order might result in different end states, it is recommended to design each branch as a separate job step that can be arranged in different process models. With quality gates at the beginning or end of job steps, the different execution branches can be activated or deactivated via configuration. This way the degree of error tolerance can be controlled for the whole information flow, depending on the maturity of the individual process parts.
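A quality gate that decides between rejection and partial execution could be sketched like this. The names `MetadataGate` and `GateResult` as well as the `partialAllowed` switch are hypothetical; in practice the field lists and the switch would come from the job configuration.

```java
import java.util.List;
import java.util.Map;

enum GateResult { COMPLETE, PARTIAL, REJECTED }

// Hypothetical quality gate: mandatory fields must be present, optional ones may be tolerated.
class MetadataGate {
    private final List<String> mandatoryFields;
    private final List<String> optionalFields;
    private final boolean partialAllowed; // configuration switch for the tolerant branch

    MetadataGate(List<String> mandatoryFields, List<String> optionalFields, boolean partialAllowed) {
        this.mandatoryFields = mandatoryFields;
        this.optionalFields = optionalFields;
        this.partialAllowed = partialAllowed;
    }

    GateResult check(Map<String, String> metadata) {
        if (anyMissing(mandatoryFields, metadata)) {
            return GateResult.REJECTED;
        }
        if (anyMissing(optionalFields, metadata)) {
            return partialAllowed ? GateResult.PARTIAL : GateResult.REJECTED;
        }
        return GateResult.COMPLETE;
    }

    // A field counts as missing if it is absent or contains only whitespace.
    private static boolean anyMissing(List<String> fields, Map<String, String> metadata) {
        for (String field : fields) {
            String value = metadata.get(field);
            if (value == null || value.trim().isEmpty()) {
                return true;
            }
        }
        return false;
    }
}
```

The process model can then route `PARTIAL` orders to a review step and `REJECTED` orders to the error branch, and flipping `partialAllowed` tightens or relaxes the tolerance without code changes.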